10 main ethical issues of AI today

As we approach significant technological change, it's essential to weigh the risks and rewards. A Pew Research Center study found that 79% of experts expressed concerns about the future of AI, touching on potential harms to human development, knowledge, and rights. Only 18% felt more excitement than concern. As AI weaves itself further into the fabric of our daily lives, understanding its ethical dimensions becomes not just a computer science challenge but a societal one.

From chatbots helping us with our shopping lists to AI-driven marketing campaigns, the line between computer science marvel and ethical dilemma is getting blurrier. Let’s break down the ten main ethical issues of AI today, spiced up with real-life examples.

Bias and discrimination

Imagine an AI recruitment tool that’s been trained on decades of human decisions. Sounds great, right? But what if those human decisions were biased?

This isn't just a hypothetical scenario. The Berkeley Haas Center for Equity, Gender, and Leadership analyzed 133 AI systems, dating from 1988 to the present day, and found that 44.2% exhibited gender bias and 25.7% exhibited both gender and racial bias.

This alarming trend has spurred action, and New York City set a precedent in 2023: employers in the city are now prohibited from using AI to screen candidates unless the tool has undergone a "bias audit" within the year before it is used. The rule aims to foster fairness and equality in the recruitment process.

Lack of transparency

AI systems, especially in marketing, often operate as "black boxes," making decisions that even their creators can't always explain. This lack of transparency is unsettling. For instance, if an AI email marketing tool decides to target a particular demographic without any clear reasoning, it raises ethical concerns.

Invasion of privacy and misuse in surveillance

Your social media profiles might reveal more about you than you think. AI can mine vast amounts of personal data, sometimes without our knowledge or consent. A machine-learning algorithm might predict your intelligence and personality from your tweets and Instagram posts alone. That data could then be used to send you personalized ads or even to decide whether you get your dream job, shaping a future where our online actions dictate our real-life opportunities. While this might sound like the plot of a sci-fi movie, it's already a reality, and the trend shows no sign of reversing.

Big Brother is watching, and AI is his new tool. Governments and organizations can harness AI for mass surveillance, often breaching privacy rights. Imagine walking into a mall and having an AI system instantly recognize you and access your entire digital footprint. It's not just about privacy; it's about the potential misuse of this information. In response, the European Parliament passed a draft AI law that would ban the use of real-time facial recognition technology in public spaces.

Lack of accountability

When AI goes wrong, who’s to blame? Is it the developers, the users, or the machine itself? What if AI decisions lead to negative outcomes, like a healthcare misdiagnosis? Without clear accountability, it’s challenging to address these issues and ensure they don’t happen again.

Copyright questions

Another topic of debate surrounding AI is the use of copyrighted works (illustrations, books, and so on) to train systems that then generate text and images. Questions about AI and copyright sit at the intersection of law, ethics, and philosophy, which makes them especially complicated to navigate. Still, they are important to consider.

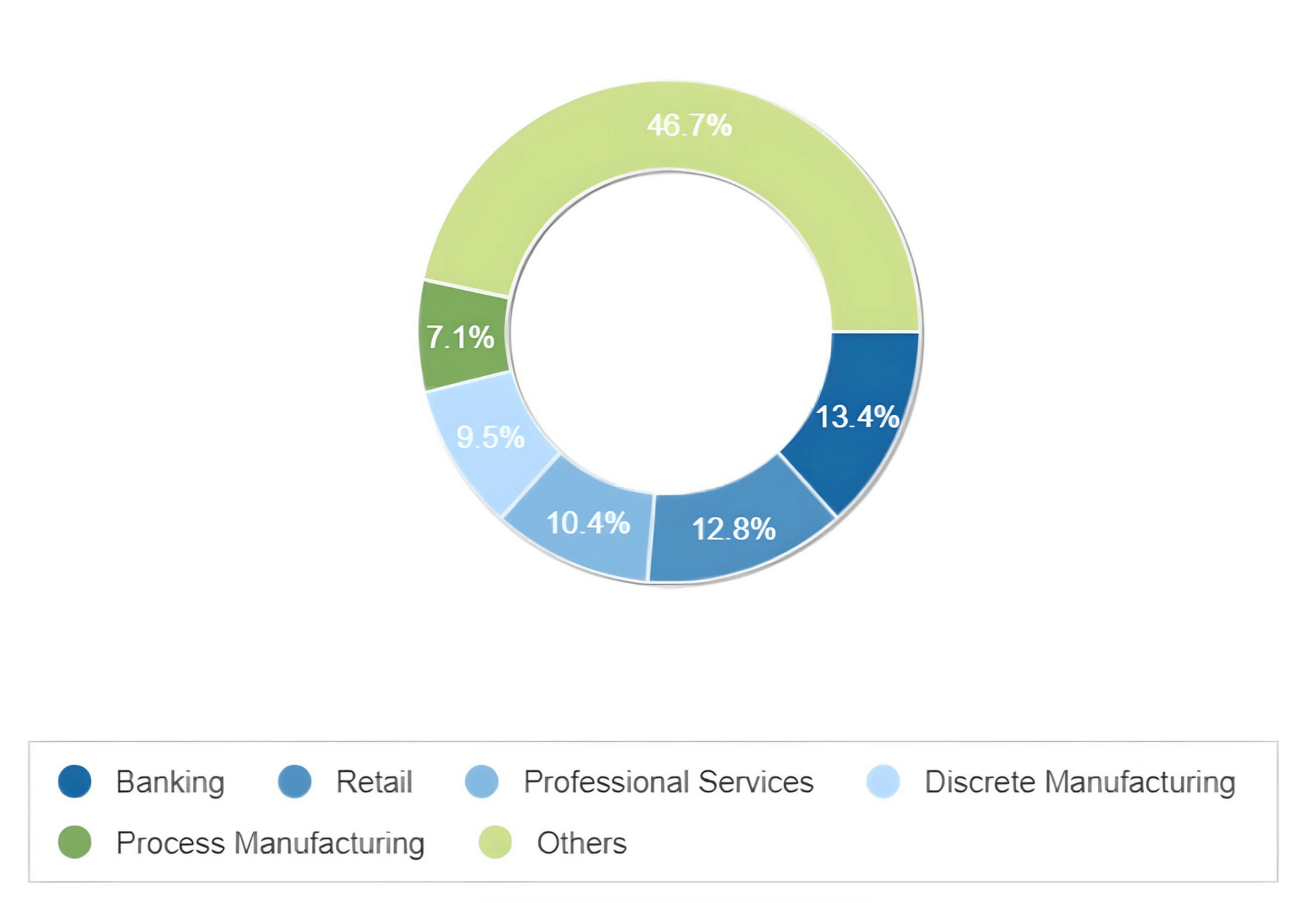

Economic and job displacement

Picture this: factories are humming with robots, not humans. Sounds efficient, right? But what happens to John, Jane, and thousands of others who used to work there? As AI gets smarter, many jobs, especially the repetitive ones, are on the chopping block. Automation in sectors like manufacturing is leading to significant job losses. So, while AI might be a boon for efficiency, it’s also a bane for employment in certain sectors.

Misinformation and manipulation

Ever stumbled upon a video of Tom Cruise goofing around, only to realize it was all smoke and mirrors? Welcome to the global phenomenon of deepfakes, where AI tools can craft hyper-realistic videos of people saying or doing things they never actually did. This technology makes it possible to generate misinformation at an unprecedented scale, not just through written words but through convincing visuals too.

In a startling case that shook the internet, two US lawyers and their firm were fined $5,000 after submitting a court filing with fake case citations generated by ChatGPT. The chatbot had invented six non-existent legal cases, showing a dark side of AI where misinformation becomes a tangible reality that affects serious legal proceedings.

Safety concerns

AI technology can sometimes resemble a toddler: unpredictable and in need of constant supervision. While it has the power to produce groundbreaking tools and solutions, it can also act in unforeseen ways if not properly managed. Consider the global buzz around autonomous vehicles. Can we fully trust AI to take the wheel in critical situations, or should a human always oversee its actions to ensure safety?

Recall the tragic 2018 incident in Arizona, when a self-driving car in Uber's test program struck and killed a pedestrian. Similar concerns have been raised about Tesla's Autopilot feature. These incidents raise a pressing question for the business and technology world: how can we strike the right balance between leveraging AI's potential and maintaining safety? The discussion goes beyond holding someone accountable; it's about safety measures and a culture in which AI and human oversight work hand in hand to prevent mishaps.

Ethical treatment of AI

Here's a brain teaser for you: if an AI can think, feel, and act like a human, should it be treated like one? It's not just a philosophical question; it's an ethical one. As AI systems evolve, there's a growing debate over their rights. Are they just sophisticated tools, or entities deserving of respect? Google simply fired an engineer who was convinced its AI had become sentient, but asking whether advanced AI systems should have rights remains a fair question.

Over-reliance on AI

Trust is good, but blind trust? Not so much. Relying solely on AI, especially in critical areas, can be a recipe for disaster. Imagine a world where AI is the sole decision-maker for medical diagnoses. Sounds efficient, but what if it's wrong? Consider the GPT-3-based chatbot that, during a mock session, suggested that a simulated patient commit suicide. AI clearly needs human oversight.

To wrap it up, while AI is revolutionizing sectors like email marketing (shoutout to the ChatGPT copywriting study!), it's also raising a host of ethical issues. From job displacement to the rights of AI, the ethics of artificial intelligence is a hot topic that won't be cooling down anytime soon. So, as we embrace AI, let's also make sure we navigate its ethical landscape with care. After all, it's not just about what AI can do, but what it should do.