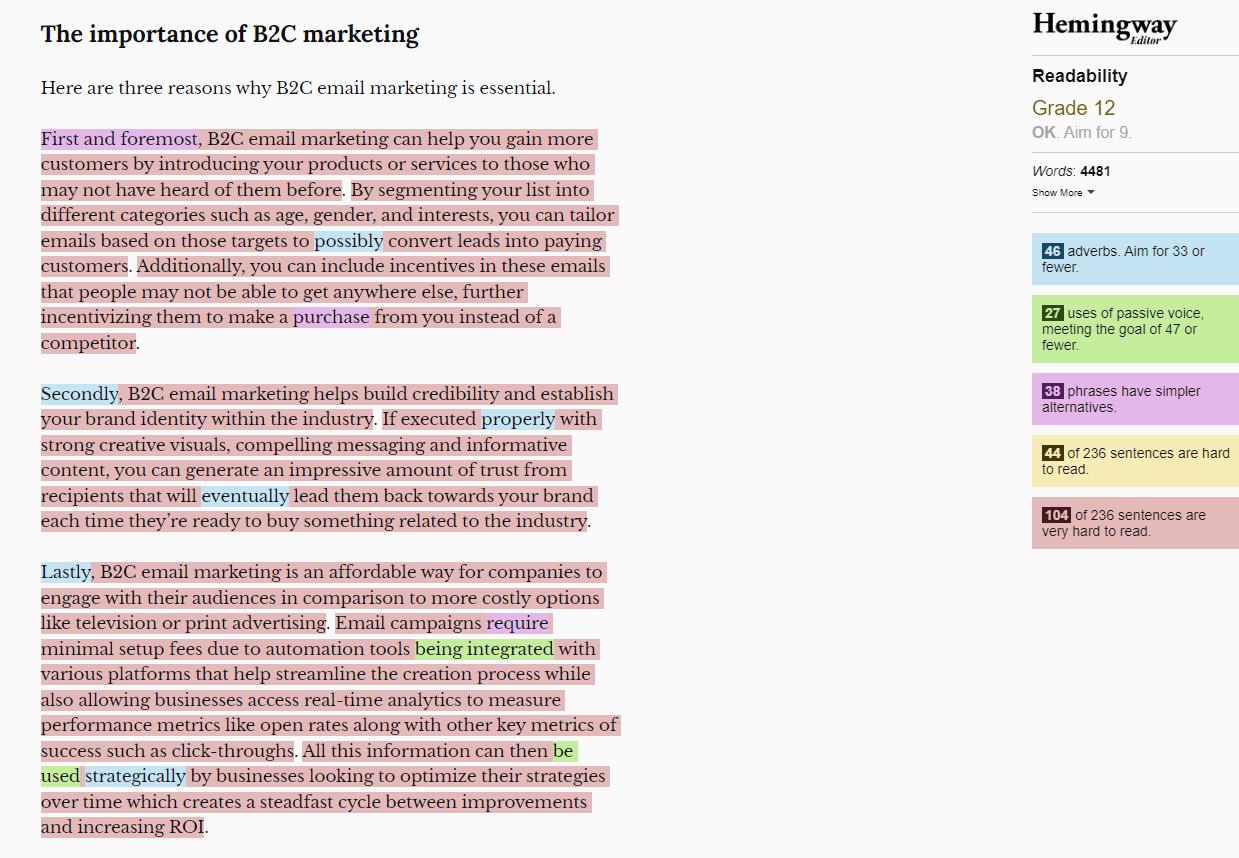

She commented on DALL·E’s output like this: “What I find interesting is how vaguely, recognizably correct they manage to be, while also being utterly wrong.” That’s funny, because ChatGPT’s attempts to educate us on B2C email marketing give the same impression. For example, let’s go back to the article’s conclusion I mentioned earlier.

| In conclusion, B2C email marketing has proven to be an effective tool for businesses looking to reach their target audience. <…> Additionally, it provides marketers with powerful analytics about customer segmentation, subscriber behavior, and other key insights. |

Does it, though? Is analytics exclusive to email marketing? Do customer segmentation and subscriber behavior even count as insights? This sentence reads like a string of buzzwords with no substance behind it, and that was the main issue I found during fact-checking. Take a look at another example. Here’s how ChatGPT thinks you should set goals for a B2C email campaign:

- Define Your Target Audience: <…>

- Set S.M.A.R.T. Goals: <…>

- Increase Traffic to Your Website: <…>

- Boost Sales Conversions & Re-engagement: <…>

- Increase Brand Awareness: <…>


It’s not that increasing traffic, boosting sales, or raising brand awareness is incorrect or off-topic. The problem is that the paragraph is supposed to explain how to identify campaign goals, not list possible goals. ChatGPT, meanwhile, treats these goal types like steps in a tutorial, which is obviously wrong.

Let’s take a look at the next paragraph, which is about list building.

- Create a B2C Sign Up Form: <…>

- Incorporate Routinely <…>

- Leverage Your Social Media Pages <…>

- Run Contests & Giveaways <…>

- Network Online <…>

- Segment Your List <…>


The H3 of this paragraph was “Build and segment your email list”. List building and segmentation are different things that need separate explanations. ChatGPT literally answered “Segment your list” to the question “How to build and segment an email list?”. Even worse, this paragraph contains an actual mistake: networking with people in your industry is a B2B tactic, and it has nothing to do with building lists for B2C campaigns.

Here’s one more example of poor AI instructions — this one is about launching an email campaign:

- Create Your Messaging <…>

- Identify Your Target Audience <…>

- Design Your Email Template <…>

- Put Together Your Contact List <…>

- Set Up A/B Testing Methods: <…>

- Send Out & Monitor Performance: <…>


Structure-wise, list building, email design, and writing were already covered in previous paragraphs. But the “best” part is ChatGPT’s definition of A/B testing:

| A/B testing makes it easy to measure which of two versions is better at achieving the goal of any digital marketing activity such as an email campaign – visits, leads, registrations or purchases etc., by allowing users to control different elements in each version and compare results afterwards |

First, A/B testing doesn’t make measuring easier; it literally is the comparison of two emails. The “makes it easy to measure” bit would be correct if ChatGPT were talking about data analysis methods like Pearson’s chi-squared test. Second, the “users” bit is so confusing that it made me think of emails that subscribers themselves can customize.
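To show what I mean by a data analysis method, here is a minimal Python sketch of Pearson’s chi-squared test applied to an A/B test of two emails. Everything in it is my own illustration, not something from ChatGPT’s article: the function name `chi_squared_2x2` is hypothetical, the click and send counts are made up, and I’m assuming the metric is clicks and that the test is run without a continuity correction.

```python
import math


def chi_squared_2x2(clicks_a, sent_a, clicks_b, sent_b):
    """Pearson's chi-squared test (no continuity correction) for two email versions.

    Assumes at least one click and at least one non-click overall,
    otherwise an expected count would be zero.
    """
    # Observed counts: rows are email versions, columns are clicked / did not click.
    observed = [
        [clicks_a, sent_a - clicks_a],
        [clicks_b, sent_b - clicks_b],
    ]
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    total = sum(row_totals)

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            # Count we would expect in this cell if both versions performed the same.
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed[i][j] - expected) ** 2 / expected

    # A 2x2 table has one degree of freedom, so the p-value
    # can be computed with the complementary error function.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Made-up numbers: version A got 120 clicks from 1,000 sends, version B got 150.
chi2, p_value = chi_squared_2x2(120, 1000, 150, 1000)
print(f"chi2 = {chi2:.3f}, p = {p_value:.4f}")
```

The A/B test itself is just sending both versions and collecting the counts. The chi-squared test is the part that helps you judge whether the difference between them is more than noise, and the usual 0.05 cutoff for the p-value is a convention, not a law.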

Here’s the final example — this one is about the sense of urgency:

| Using a sense of urgency in B2C email marketing campaigns helps to motivate consumers to take action faster. It encourages them to purchase goods before the offer expires, thus moving them closer to becoming customers and increasing sales. |

It’s not blatantly incorrect. The problem is that none of it needed to be said in the first place, because it’s too obvious. The article mostly consists of claims like this: vaguely correct, the right words in an unhinged order and context, overexplaining, repeated ideas, and so on.

The final rating: ⭐⭐⭐

The final commentary: We’ve reached the uncanny valley of factual correctness.