Dark patterns and common compliance guidelines

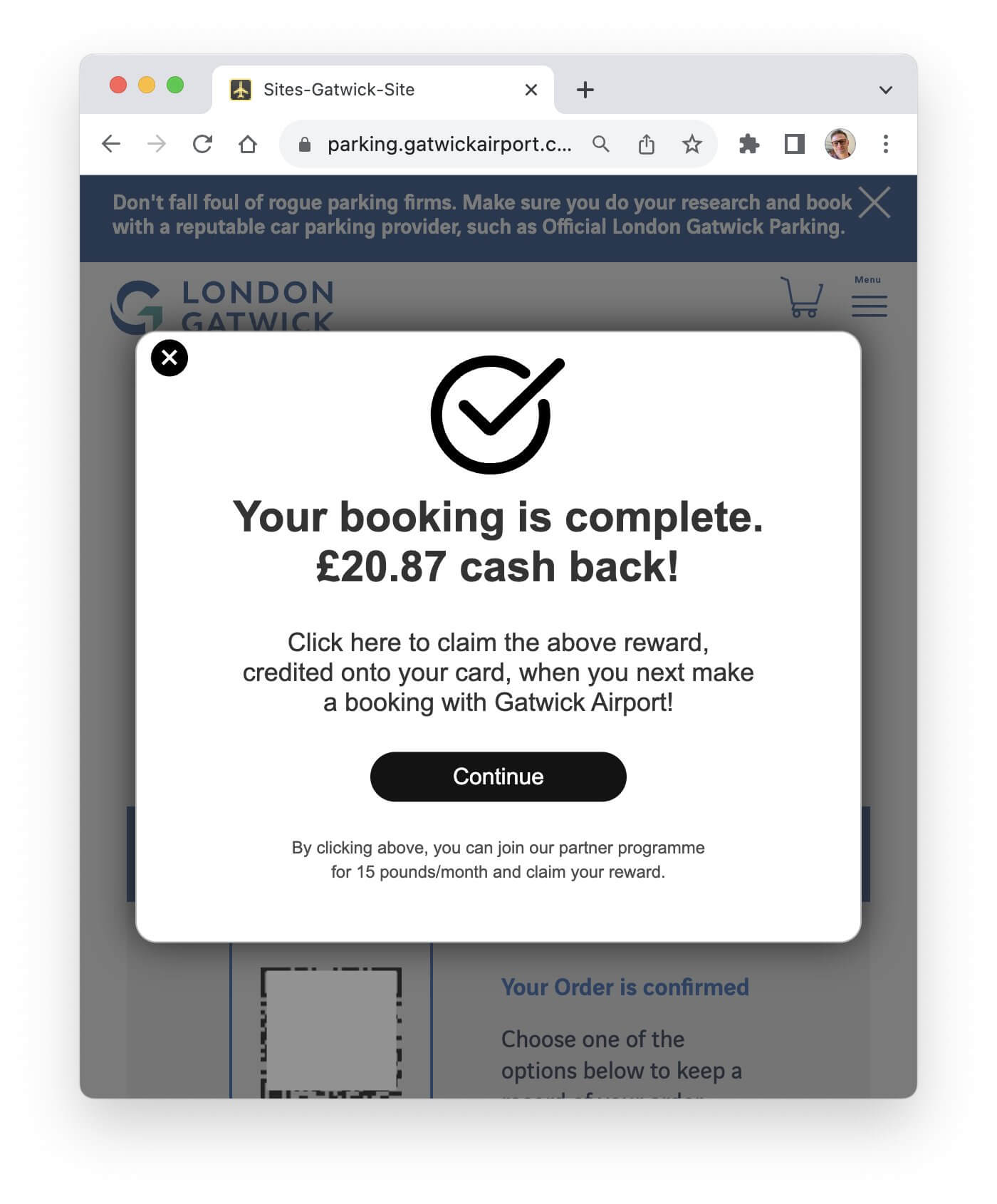

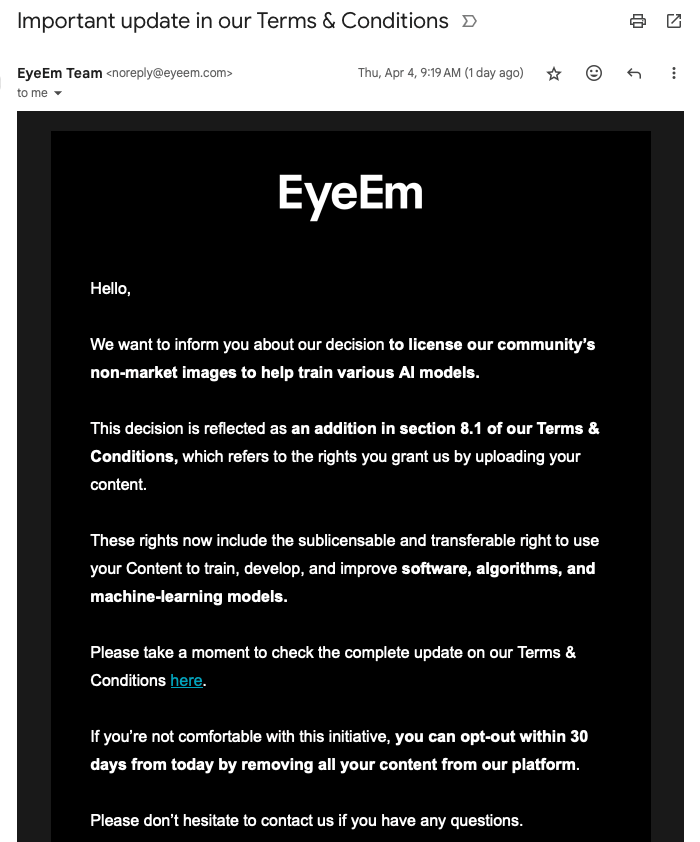

While many businesses agree that nothing called “dark” can be good, the internet is still full of dark patterns: social media platforms that misuse personal information, and email marketing that relies on manipulative tricks.

This brings us to consumer protection and the regulators that enforce it, including the federal heavyweights. Laws known by prominent acronyms, such as GDPR, CPRA, and COPPA, show that regulators in both the EU and the US, the FTC among them, oppose this pervasive style of deception. Even massive corporations are not immune to penalties. For example, in 2022 the French data protection authority (CNIL) fined Google €150 million (about $170 million) and Meta €60 million (about $68 million) for making it harder to refuse cookies than to accept them. But let’s dig deeper.

GDPR and EDPB guidelines compliance

The General Data Protection Regulation (GDPR) is a set of rules that organizations in the European Union, and those elsewhere that process the personal data of people in the EU, must follow to protect the privacy of digital data. This includes GDPR email compliance. The European Data Protection Board (EDPB) is responsible for ensuring that the GDPR is applied consistently across the EU.

The EDPB’s recent guidelines on deceptive design patterns specifically address social media platforms, urging them to avoid interface designs that could mislead users into making privacy-endangering decisions without clear consent.

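The EDPB also issues binding decisions that resolve disputes between national data protection authorities in cross-border cases; those authorities must then act on them, including by imposing fines on companies that are not GDPR compliant. For example, in 2023, following an EDPB binding decision, Ireland’s Data Protection Commission fined TikTok €345 million (approximately $368 million) for unfairly processing the personal data of children between 13 and 17.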

CCPA and CPRA compliance

Across the pond, California addresses user privacy with the California Consumer Privacy Act (CCPA) and the California Privacy Rights Act of 2020 (CPRA), which grant California residents the right to know what personal information is gathered about them, how it is used, and with whom it is shared. These laws also include rules against dark patterns.

The rules forbid practices that obstruct the opt-out process and require opting out to be simple for consumers who want to do so. This means banning ambiguous language and complicated navigation that could lead users to give permission unintentionally.

CCPA compliance is actively enforced, and no company, even an international giant, is immune. For instance, in 2022, Sephora agreed to pay $1.2 million in penalties for selling customers’ personal information without telling them and for failing to honor their opt-out requests.

FTC Act and fines

The US government showed its resolve to act against companies that employ dark patterns when the FTC released a 2022 staff report, “Bringing Dark Patterns to Light,” which catalogs deceptive techniques used across many industries.

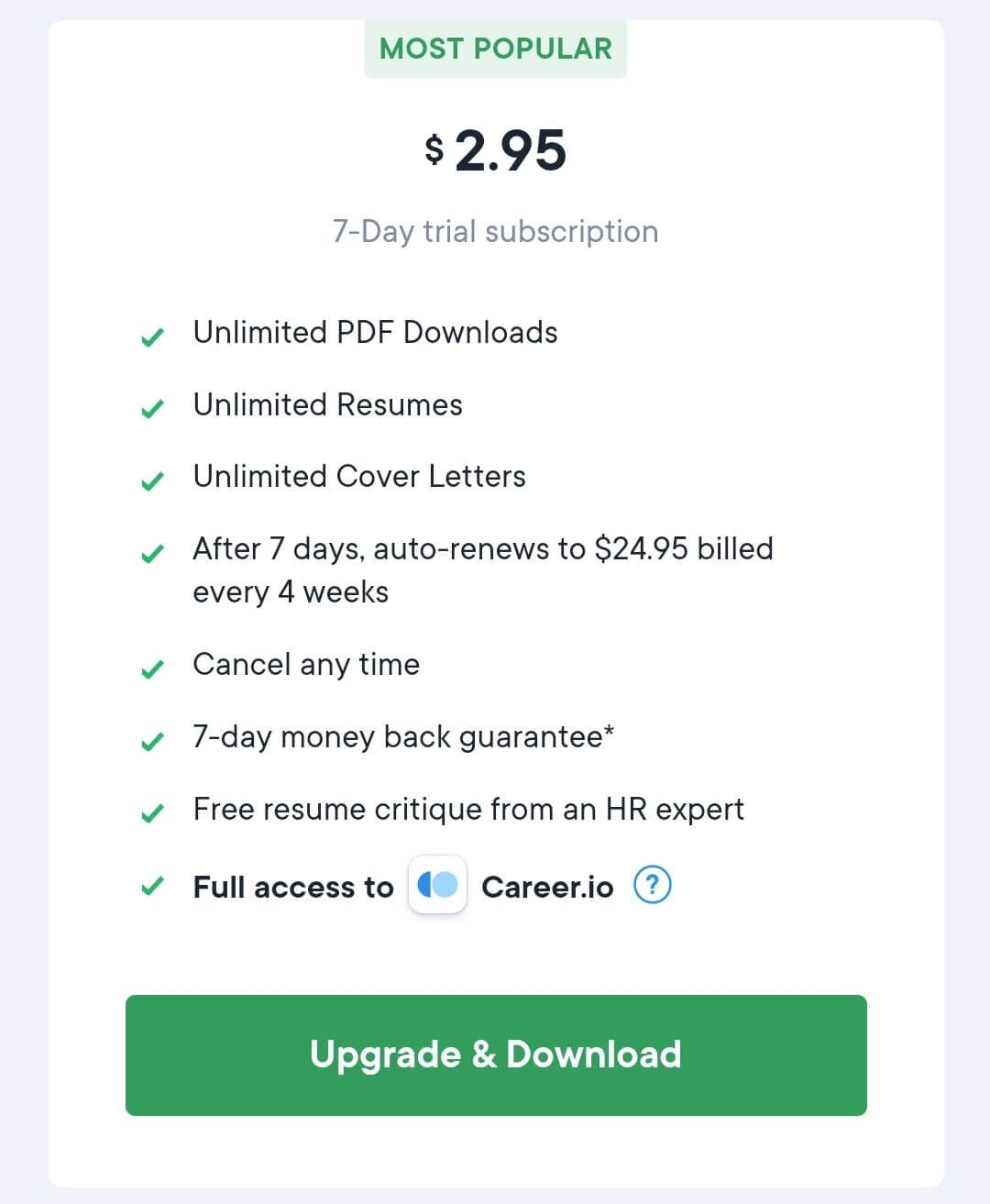

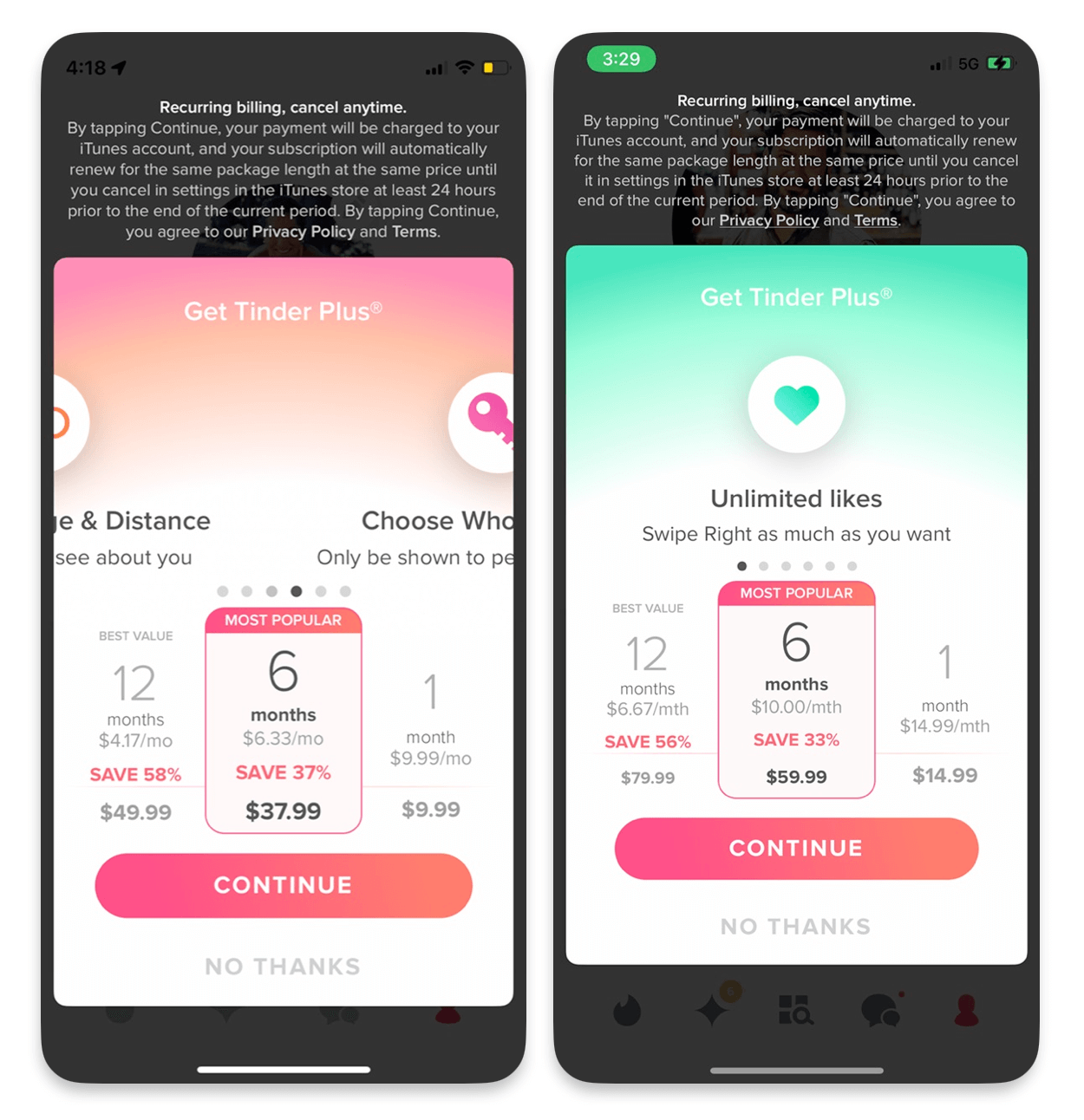

Here’s a breakdown: The Federal Trade Commission asks businesses to do three basic things:

- Disclose all terms of any transaction.

- Make it so simple to cancel that even a toddler can do it.

- Eliminate ambiguous language.

Sounds simple, right? Apparently not for everyone. Just look at Amazon, which won the prize for enrolling customers in Prime subscriptions without their consent and making cancellation needlessly complicated. The FTC was not amused. The result? A swift punch of legal action: the FTC sued Amazon in 2023.

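And let’s not forget Epic Games, which used design tricks to get Fortnite players to make purchases they didn’t want. In 2022, Epic agreed to pay $245 million to settle the FTC’s charges, with the money going to refund affected consumers.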

Another example of the FTC’s swift action is Vonage, a communications company whose cancellation procedures were so complicated that, in 2022, it agreed to pay $100 million to settle the FTC’s charges.

Not to mention the record-breaking $5 billion penalty the FTC imposed on Facebook in 2019: